Portfolio

Experiences

IDEA Lab — Robotics Research Assistant (2023 – now)

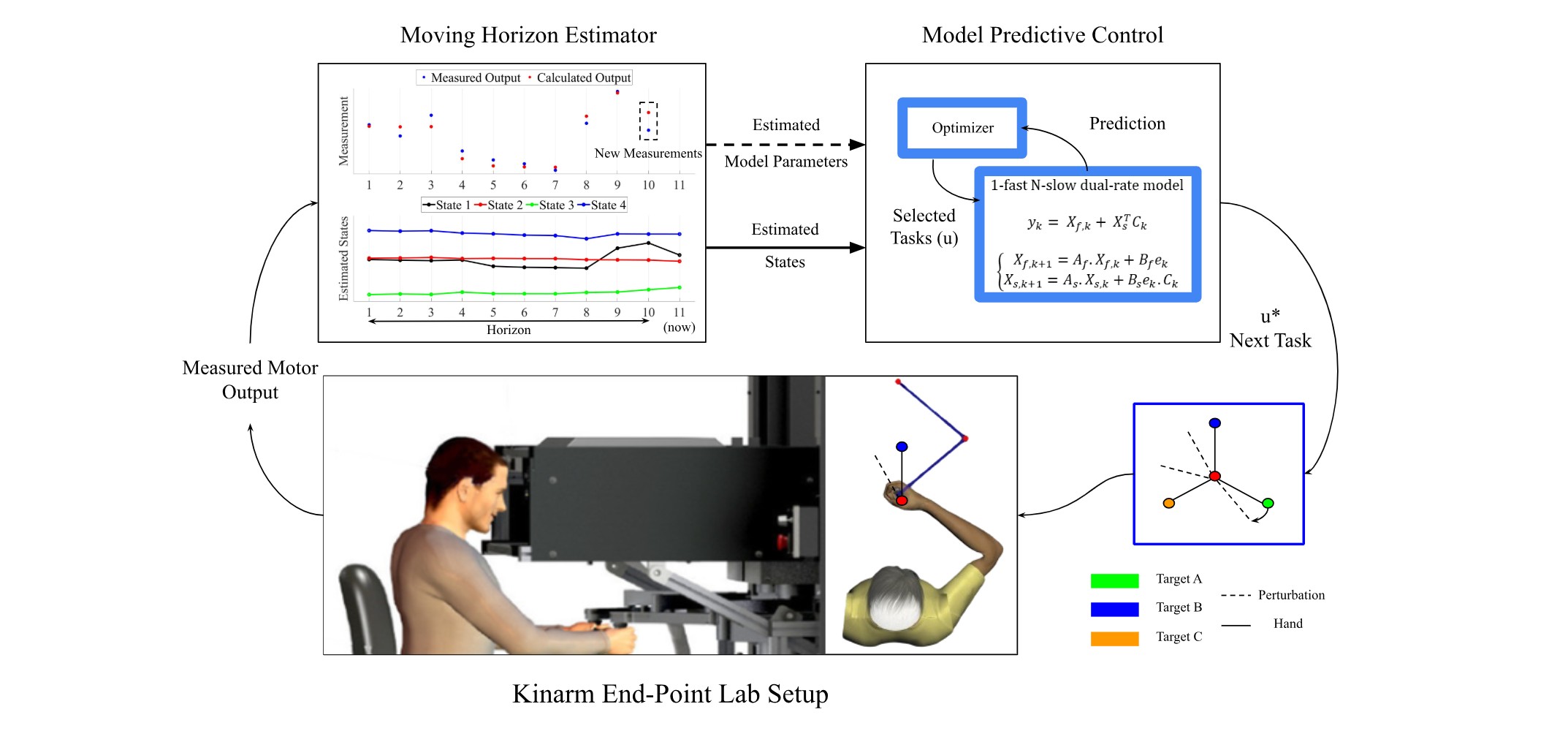

Objective: Deliver optimal, personalized robotic training using online system identification and optimal control methods.

Methods: Employed Joint Extended Kalman Filter (JEKF) and Moving Horizon Estimation (MHE) for real-time state and parameter estimation of human motor learning, facilitating personalized robotic training. Developed optimal control techniques, including Model Predictive Control (MPC) and Linear Quadratic Regulator (LQR) methods, to enable real-time, personalized task scheduling in robotic training.
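As an illustration of the LQR idea mentioned above, here is a minimal sketch for a scalar system. The model `x[k+1] = a*x[k] + b*u[k]`, the weights `q` and `r`, and the function names are hypothetical choices for this example and are not the models or code used in the lab.

```python
def scalar_lqr_gain(a, b, q, r, iters=500):
    """Iterate the discrete-time Riccati equation for x[k+1] = a*x[k] + b*u[k].

    Returns the feedback gain k (control law u = -k*x) and the cost-to-go p.
    """
    p = q  # start the recursion from the stage cost weight
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    k = a * b * p / (r + b * b * p)
    return k, p


def simulate_closed_loop(a, b, k, x0, steps):
    """Roll the closed-loop system forward under u = -k*x."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        u = -k * x
        x = a * x + b * u
        trajectory.append(x)
    return trajectory


if __name__ == "__main__":
    gain, cost = scalar_lqr_gain(a=1.0, b=1.0, q=1.0, r=1.0)
    print(gain, simulate_closed_loop(1.0, 1.0, gain, x0=1.0, steps=5))
```

Raising `r` relative to `q` penalizes control effort more heavily and yields a smaller gain, which is the basic trade-off an LQR design tunes.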

Outcome: Derived individualized motor learning models and optimized training schedules in real time for each participant. Participants trained under this adaptive framework demonstrated higher learning rates and superior long-term retention compared to the control group.

Coding: MATLAB/Simulink, C++, Python.

Robotic Systems: Kinarm (BKIN Technologies Ltd., Canada), Quanser 2D planar robot (Quanser, Canada).

Institute of Mechanism Theory, Machine Dynamics and Robotics (IGMR) — Robotics Research Assistant (summer 2025)

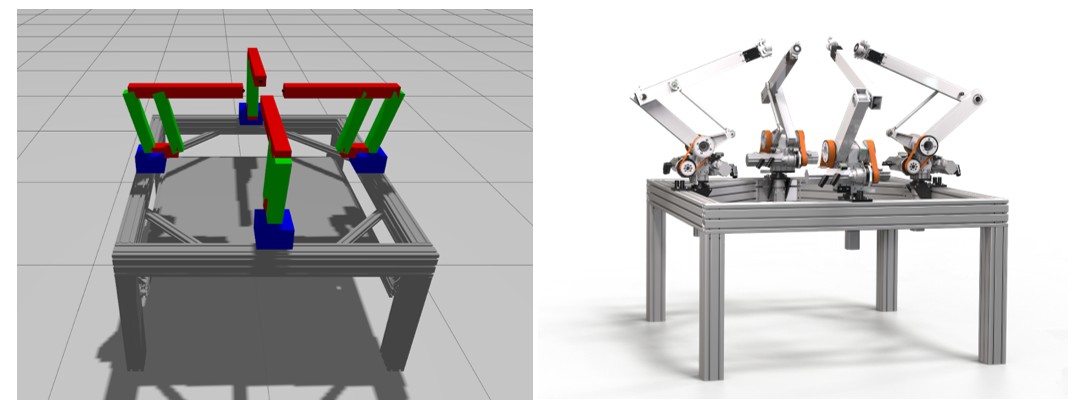

Objective: Develop a custom ROS 2 controller for the Paragrip parallel robot, integrated with the ROS 2 Control and Gazebo simulation environments. The controller enables real-time trajectory execution using resolved-rate motion control and compensates for object drift through real-time sensor feedback.

Outcome: Successfully designed, implemented, and tested a fully functional ROS 2 controller capable of executing joint trajectories in real time. The controller correctly handles position and velocity command/state interfaces, supports multi-arm operation, and compensates for object drift via real-time sensor feedback during motion.
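Resolved-rate motion control maps a desired Cartesian velocity to joint velocities through the inverse Jacobian. The sketch below shows the idea on a two-link planar serial arm, which is a stand-in chosen for brevity and not the Paragrip kinematics; the link lengths, gain, and function names are assumptions. Re-reading `target` from a sensor at every step is one simple way to compensate for a drifting object.

```python
import math

L1, L2 = 1.0, 1.0  # hypothetical link lengths in meters


def forward_kinematics(q):
    """End-effector position of a two-link planar arm."""
    x = L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1])
    y = L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1])
    return x, y


def jacobian(q):
    """2x2 Jacobian of the end-effector position w.r.t. the joint angles."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]


def resolved_rate_step(q, target, gain, dt):
    """One step: Cartesian error -> commanded velocity -> joint velocity."""
    x, y = forward_kinematics(q)
    vx, vy = gain * (target[0] - x), gain * (target[1] - y)
    J = jacobian(q)
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    if abs(det) < 1e-6:
        raise ValueError("Jacobian is near-singular")
    # Closed-form inverse of the 2x2 Jacobian applied to the velocity.
    qd0 = (J[1][1] * vx - J[0][1] * vy) / det
    qd1 = (-J[1][0] * vx + J[0][0] * vy) / det
    return [q[0] + qd0 * dt, q[1] + qd1 * dt]


def track(q, target, gain=2.0, dt=0.01, steps=1000):
    """Drive the end effector toward a fixed target."""
    for _ in range(steps):
        q = resolved_rate_step(q, target, gain, dt)
    return q
```

A real controller would also handle joint limits and singularities, for example with a damped least-squares inverse, rather than raising an error.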

Coding & Tools: C++, Python, ROS 2, GitLab, Gazebo, RViz.

Robotic System: Paragrip Parallel Robot.

Neuromuscular Control & Biomechanics Lab (NCBL) — Machine Learning Research Assistant (2023 – 2025)

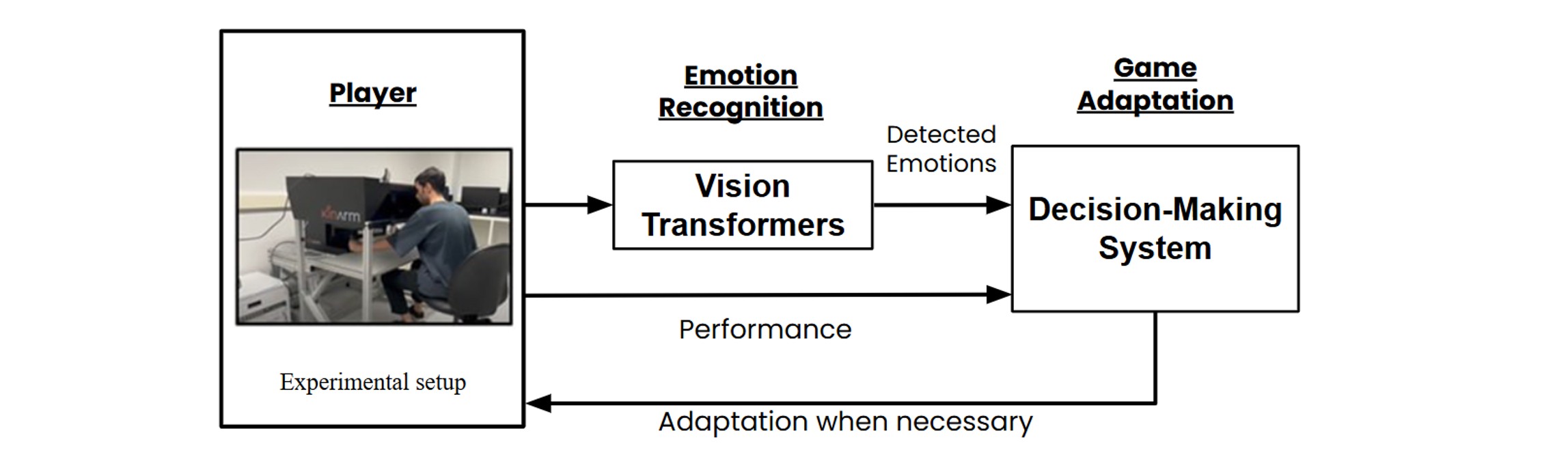

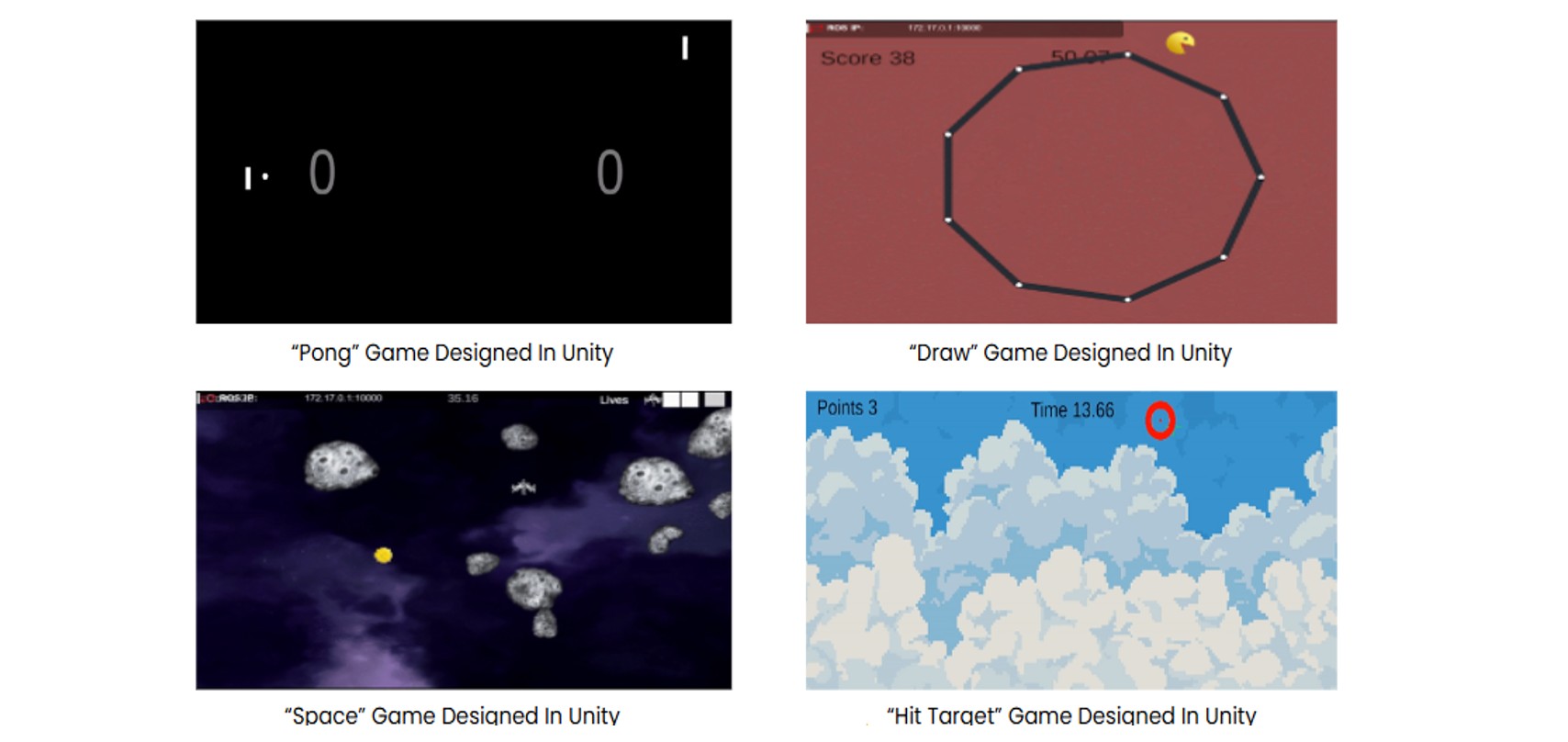

Objective: The project aims to create an adaptive, performance-driven game environment in Unity to evaluate and enhance users' motor skills. It includes a real-time emotion-aware system, allowing the game to respond dynamically to players' emotional states, and employs advanced recommender systems to adjust game difficulty based on performance and emotional feedback.

Outcome: Four games (Spaceship, Pong, Draw, and Hit Target) were developed to assess and improve users' motor skills. Real-time emotion recognition using vision transformers classified players' emotions, enabling responsive adaptations that enhance user engagement. In addition, a recommendation system built with fuzzy logic, reinforcement learning, and LLMs dynamically adjusts game difficulty in response to player performance and emotions, giving each player a personalized experience.

Coding: C#, Python, Unity.

Sensors: Shimmer3 GSR, depth camera.

Robotic System: Kinarm (BKIN Technologies Ltd., Canada).

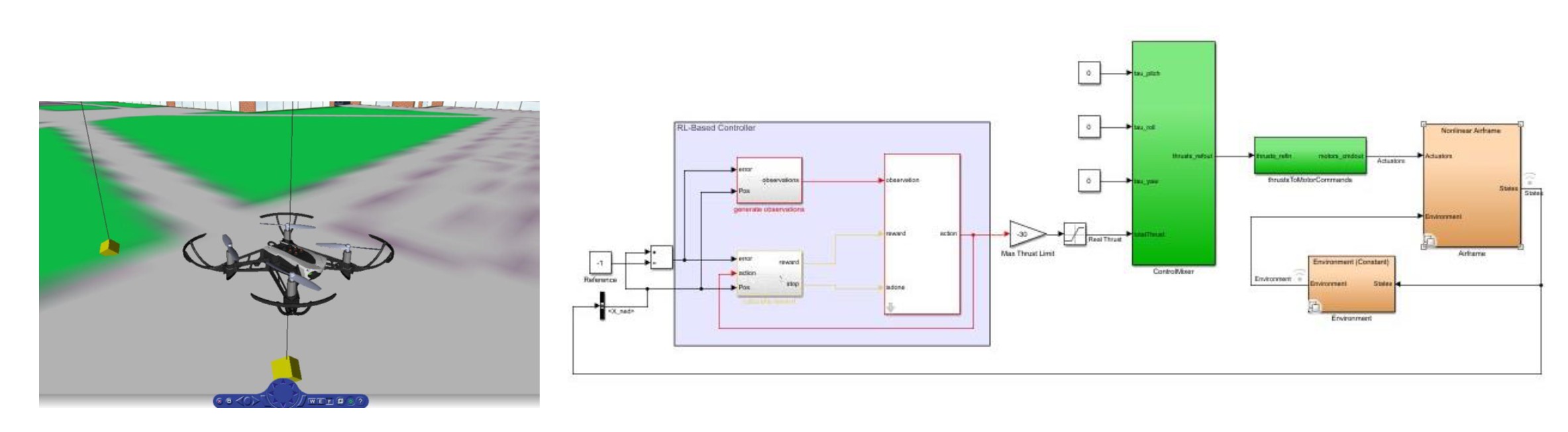

Advanced Robotics and Automated Systems Lab (ARAS) — Control Systems Research Assistant (2021 – 2023)

Objective: To design and optimize a robust control system for a quadrotor by developing an accurate dynamic model, implementing enhanced PID control strategies, and leveraging reinforcement learning techniques to improve adaptability in altitude control.

Outcome: An accurate quadrotor dynamic model was developed and validated using Newton-Euler and Lagrange equations, ensuring precise system representation. Six PID controllers with optimized coefficients were implemented to significantly improve system stability and performance. Additionally, a reinforcement learning agent utilizing the Deep Deterministic Policy Gradient (DDPG) algorithm was developed, resulting in enhanced adaptability and responsiveness to environmental changes.
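To illustrate a single altitude loop of the kind described above, here is a minimal PID sketch on a one-dimensional point-mass model. The gains, mass, and time step are hypothetical values picked so the closed loop is stable; they are not the optimized coefficients from the project, and the full controller used six PID loops rather than one.

```python
def simulate_altitude_pid(setpoint, kp=6.0, ki=4.0, kd=4.0,
                          mass=1.0, g=9.81, dt=0.01, duration=20.0):
    """Simulate PID altitude control of a point mass: m*z'' = thrust - m*g.

    The derivative term acts on the measured velocity to avoid a kick
    when the setpoint changes. Thrust is left unsaturated for simplicity.
    """
    z, vz, integral = 0.0, 0.0, 0.0
    history = []
    for _ in range(int(round(duration / dt))):
        error = setpoint - z
        integral += error * dt
        thrust = kp * error + ki * integral - kd * vz
        az = thrust / mass - g
        # Semi-implicit Euler integration of the vertical dynamics.
        vz += az * dt
        z += vz * dt
        history.append(z)
    return history


if __name__ == "__main__":
    altitude = simulate_altitude_pid(setpoint=1.0)
    print(f"final altitude: {altitude[-1]:.4f} m")
```

The integral term is what cancels gravity here: at steady state it settles at the value that makes the thrust equal to the weight.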

Selected Projects

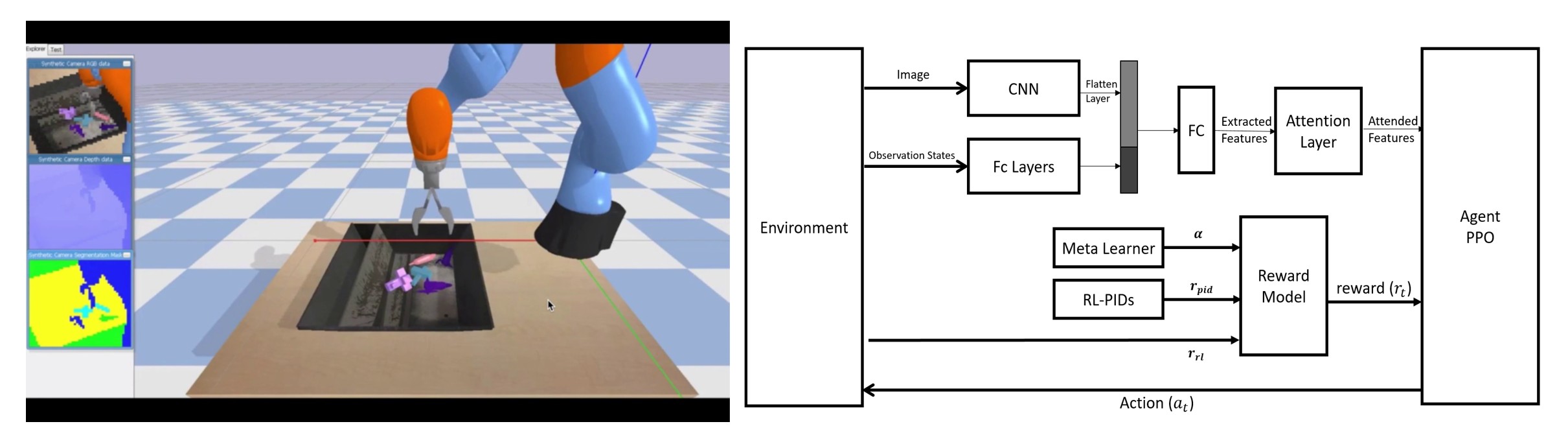

Reinforcement Learning-based Controller for Object Grasping

- Developed a reinforcement learning agent based on the PPO algorithm for grasping objects with a KUKA arm in PyBullet

- Enhanced sample efficiency using PID controller feedback in the reward function

- Improved performance using a meta-learning agent and attention layers
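For reference, the core of PPO is its clipped surrogate objective, sketched below in plain Python. The function name and the default clip range of 0.2 are illustrative assumptions; the project's actual training code and hyperparameters are not shown here.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective over a batch.

    ratios:     new_policy_prob / old_policy_prob for each sampled action
    advantages: advantage estimate for each sampled action
    """
    total = 0.0
    for ratio, adv in zip(ratios, advantages):
        clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Taking the minimum removes the incentive to move the policy
        # far from the old one in a single update.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(ratios)
```

In training, this quantity is maximized with respect to the policy parameters, usually alongside a value-function loss and an entropy bonus.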

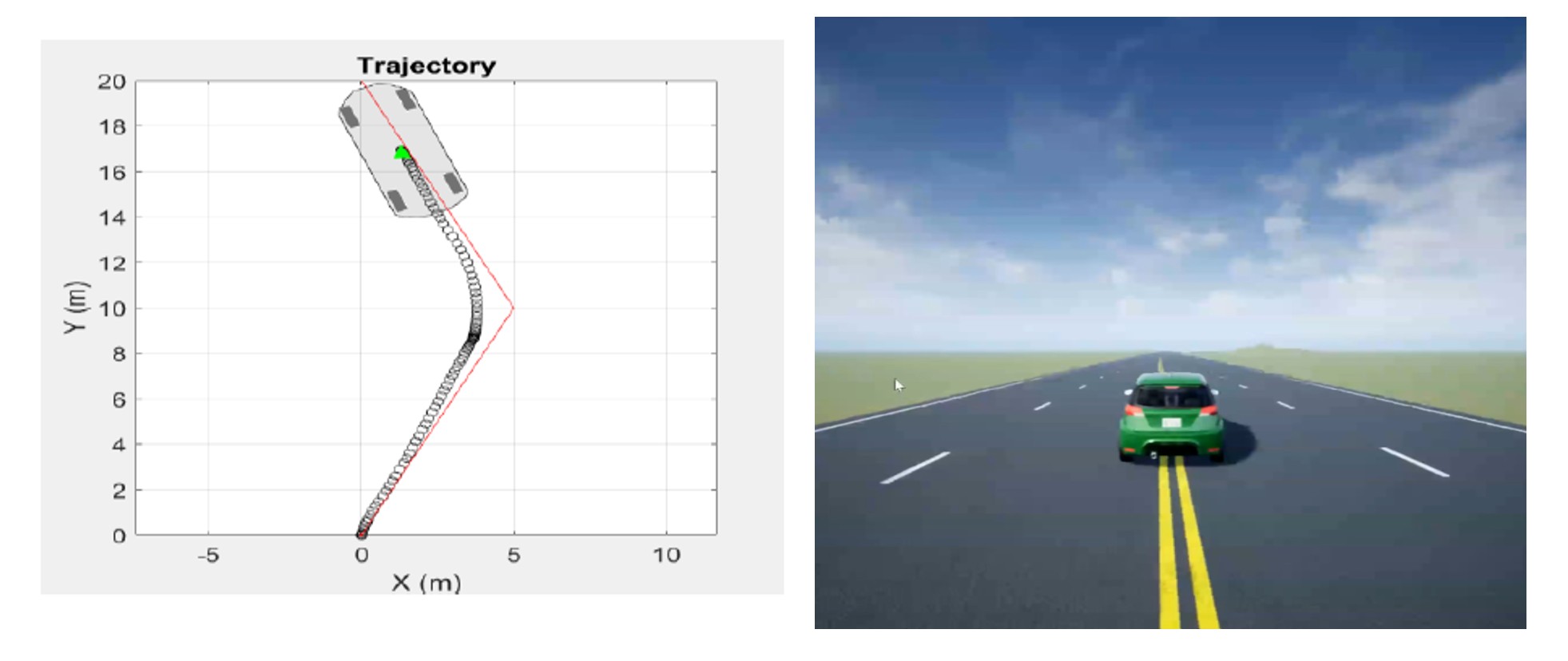

Data-Driven MPC Controller Design for Vehicle Path Tracking

- Excited the system with various signals and collected data to apply machine learning techniques to model the system dynamics (3DOF Vehicle Bicycle Model with Force Input in MATLAB)

- Fitted a linear ARX model to the collected data and developed a linear MPC controller with constraints

- Designed a nonlinear MPC controller incorporating the NARX model and considering constraints to perform trajectory tracking with minimum change in the vehicle yaw rate
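The identification step can be sketched as a least-squares fit of an ARX model to input-output data. The example below uses a first-order model `y[k] = a*y[k-1] + b*u[k-1]` and a synthetic two-sine excitation as stand-ins; the project itself used a 3DOF vehicle model in MATLAB, so the model order, signals, and function names here are assumptions.

```python
import math


def simulate_first_order(a, b, u):
    """Generate output data from y[k] = a*y[k-1] + b*u[k-1]."""
    y = [0.0]
    for k in range(1, len(u)):
        y.append(a * y[k - 1] + b * u[k - 1])
    return y


def fit_arx_first_order(u, y):
    """Least-squares estimate of (a, b) via the 2x2 normal equations."""
    s_yy = s_yu = s_uu = s_y1 = s_u1 = 0.0
    for k in range(1, len(y)):
        y_prev, u_prev, y_now = y[k - 1], u[k - 1], y[k]
        s_yy += y_prev * y_prev
        s_yu += y_prev * u_prev
        s_uu += u_prev * u_prev
        s_y1 += y_prev * y_now
        s_u1 += u_prev * y_now
    det = s_yy * s_uu - s_yu * s_yu
    a = (s_uu * s_y1 - s_yu * s_u1) / det
    b = (s_yy * s_u1 - s_yu * s_y1) / det
    return a, b


if __name__ == "__main__":
    # Excite the system with two sine components so both parameters
    # are identifiable.
    u = [math.sin(0.3 * k) + 0.5 * math.sin(1.1 * k) for k in range(200)]
    y = simulate_first_order(0.8, 0.5, u)
    print(fit_arx_first_order(u, y))
```

The identified `a` and `b` define the linear prediction model that an MPC controller would then use over its horizon.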

Human Activity Recognition (HAR)

- Dimension reduction and pre-processing of IMU data collected via a smartphone

- Employed DNN, GRU, and bidirectional GRU to classify human activities, such as walking, sitting, etc.

- Optimized network hyperparameters (learning rate, neuron numbers, etc.) using Grid Search and GA

Human Motion Analysis with Motion Capture System and Force Plate Data

- Processed, analyzed, and visualized lower-body motion capture and force plate data from several participants walking in a human movement laboratory

- Calculated kinematics and inverse dynamics to find motion, forces, moments, and powers of ankle, knee, and hip joints
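The inverse dynamics step can be sketched for a single segment. The example below applies planar Newton-Euler equations to the foot to obtain the ankle reaction force and moment from the ground reaction force, then computes joint power. It assumes a 2D sagittal-plane model with no free moment from the force plate, and all names and sign conventions (counterclockwise positive, y up) are choices made for this illustration.

```python
G = 9.81  # gravitational acceleration, m/s^2


def cross2d(r, f):
    """z-component of the planar cross product r x f."""
    return r[0] * f[1] - r[1] * f[0]


def ankle_inverse_dynamics(mass, inertia, com, ankle, cop, grf,
                           com_acc, alpha):
    """Ankle reaction force and moment acting on the foot segment.

    Newton: F_ankle + F_grf + m*g_vec = m*a_com, with g_vec = (0, -G)
    Euler (about the COM):
        M_ankle + r_cop x F_grf + r_ankle x F_ankle = I*alpha
    """
    f_ankle = (mass * com_acc[0] - grf[0],
               mass * com_acc[1] - grf[1] + mass * G)
    r_cop = (cop[0] - com[0], cop[1] - com[1])
    r_ankle = (ankle[0] - com[0], ankle[1] - com[1])
    m_ankle = (inertia * alpha
               - cross2d(r_cop, grf)
               - cross2d(r_ankle, f_ankle))
    return f_ankle, m_ankle


def joint_power(moment, angular_velocity):
    """Joint power in watts from moment (N*m) and angular velocity (rad/s)."""
    return moment * angular_velocity
```

The knee and hip follow the same pattern, with the reaction at the distal joint (negated) taking the place of the ground reaction force for the next segment up the chain.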